Real-Time Point-Based Global Illumination

Introduction

Real-time global illumination is an important area of research today, and Enlighten by Geomerics is among the best solutions in this domain. I will describe a technique that is less efficient, but a good starting point, and that supports dynamic lights and objects with multiple bounces!

Going to texture space

Like Enlighten, we want to dynamically update a lightmap texture. To do this, the first step is to give every face of your scene its own unique area in UV space, a.k.a. UV unwrapping.

Many different techniques can be used here. We chose planar projection, described here: each face is projected onto the plane perpendicular to the dominant axis of its normal. After that, we pack all the small per-face textures into one big texture, following this article. That works OK, but it would be better if each face stayed next to its neighbors in texture space.
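A minimal CPU-side sketch of these two steps in Python, for illustration only: `project_face` does the dominant-axis projection, and `shelf_pack` is a naive shelf packer standing in for the packing article (which may use a different algorithm). All names are hypothetical. There is no padding between charts here, although a real lightmap needs a gutter of a texel or two to avoid bleeding, and the final lightmap UVs would be obtained by adding each chart's offset and dividing by the larger of `atlas_width` and the returned height.

```python
def dominant_axis(normal):
    """Index (0 = x, 1 = y, 2 = z) of the largest absolute component of the normal."""
    return max(range(3), key=lambda i: abs(normal[i]))


def project_face(vertices, normal):
    """Planar projection of one face: drop the dominant axis of its normal, keep
    the two other coordinates, and move the chart so its bounding box starts at 0."""
    axis = dominant_axis(normal)
    keep = [i for i in range(3) if i != axis]
    pts = [(v[keep[0]], v[keep[1]]) for v in vertices]
    min_u = min(u for u, _ in pts)
    min_v = min(v for _, v in pts)
    chart = [(u - min_u, v - min_v) for u, v in pts]
    size = (max(u for u, _ in chart), max(v for _, v in chart))
    return chart, size


def shelf_pack(sizes, atlas_width):
    """Naive shelf packing: sort charts by height, fill rows left to right and
    open a new row (shelf) when the current one is full.
    Returns one (x, y) offset per chart, in input order, and the total height."""
    order = sorted(range(len(sizes)), key=lambda i: -sizes[i][1])
    offsets = [None] * len(sizes)
    x = y = shelf_h = 0.0
    for i in order:
        w, h = sizes[i]
        if x + w > atlas_width and x > 0:  # row full: start a new shelf
            x, y, shelf_h = 0.0, y + shelf_h, 0.0
        offsets[i] = (x, y)
        x += w
        shelf_h = max(shelf_h, h)
    return offsets, y + shelf_h
```

Charts are kept in world units, so every face gets roughly the same texel density once the atlas is scaled to the lightmap resolution.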

Of course, you can simply let your favorite 3D modeler do this for you, possibly in a better way. For example, in Blender: Edit Mode -> press "U" -> Lightmap Pack.

Ptex, from Walt Disney Animation Studios, might be a better method, but I haven't tried it yet.

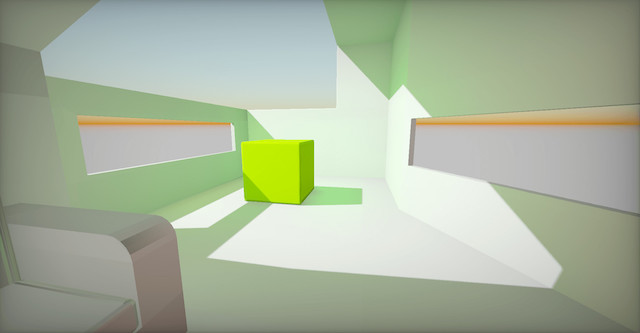

At this point, you must be able to generate a color for each face of your scene by coloring the lightmap (don't do that, it's just to be clear):

Getting the data of the scene

You can now render your scene directly into texture space, and we want to capture all of the scene data in that space.

To do that, we use multiple render targets (MRT) to write 3 small textures (e.g. 128²) simultaneously:

| ID | Format | R | G | B | A |
|---|---|---|---|---|---|
| 1 | RGBA32F | PositionX | PositionY | PositionZ | 1.f |
| 2 | RGB32F | NormalX | NormalY | NormalZ | |
| 3 | RGB8 | AlbedoR | AlbedoG | AlbedoB | |

We simply store the position, normal and color (albedo) of each face.

Before drawing into these textures, you must clear them with alpha set to 0. The alpha channel of the first texture is set to 1 simply to say "yes, this is a real pixel where we have written some data". This will be useful later, because your textures will contain some empty space. If they don't, you are really lucky!
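To make the role of the alpha flag concrete, here is a small CPU reference in Python of what the MRT pass produces for the position texture. It point-samples each texel center in lightmap UV space, as the GPU rasterizer does, interpolates the world position with barycentric weights, and writes alpha = 1 only where a face was drawn; everything else keeps the cleared value with alpha = 0. The function names and the `(uvs, positions)` triangle layout are assumptions made for the sketch, and looping over every texel for every triangle is deliberately naive.

```python
def barycentric(p, a, b, c):
    """Barycentric coordinates of 2D point p in triangle (a, b, c), or None if degenerate."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    if det == 0:
        return None
    l0 = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    l1 = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return l0, l1, 1.0 - l0 - l1


def rasterize_positions(size, triangles):
    """triangles: list of (uvs, positions) with lightmap uvs in [0,1]^2 and
    world-space positions as 3-tuples. Returns a size x size grid of RGBA texels:
    (x, y, z, 1.0) where a triangle covers the texel center, zeros elsewhere."""
    grid = [[(0.0, 0.0, 0.0, 0.0)] * size for _ in range(size)]  # cleared, alpha = 0
    for uvs, positions in triangles:
        for ty in range(size):
            for tx in range(size):
                center = ((tx + 0.5) / size, (ty + 0.5) / size)
                w = barycentric(center, *uvs)
                if w is None or min(w) < 0.0:
                    continue  # degenerate triangle, or texel center outside it
                xyz = tuple(sum(w[k] * positions[k][i] for k in range(3)) for i in range(3))
                grid[ty][tx] = xyz + (1.0,)  # alpha = 1: "a real pixel"
    return grid
```

A face smaller than a texel that misses every texel center leaves no trace at all, which is exactly the problem discussed after the shaders.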

Vertex shader:

```glsl
#version 330

layout (location = 0) in vec3 position;
layout (location = 1) in vec3 normal;
layout (location = 2) in vec2 texcoord0; // material uv (albedo texture)
layout (location = 3) in vec2 texcoord1; // your lightmap uv computed before, in [0,1]

smooth out vec3 pos, norm;
smooth out vec2 uv;

uniform mat4 modelMatrix;
uniform mat4 normalMatrix;

void main()
{
    pos  = (modelMatrix * vec4(position, 1.)).xyz;
    norm = (normalMatrix * vec4(normal, 0.)).xyz; // w = 0: a normal is a direction, not a point
    uv   = texcoord0;

    // This is the trick: write in lightmap space.
    // Lightmap uvs live in [0,1] while clip space is [-1,1], hence the remap.
    gl_Position = vec4(texcoord1 * 2. - 1., 0., 1.);
}
```

Fragment shader:

```glsl
#version 330

smooth in vec3 pos, norm;
smooth in vec2 uv;

layout (location = 0) out vec4 buffer1; // position + "written" flag
layout (location = 1) out vec3 buffer2; // normal
layout (location = 2) out vec3 buffer3; // albedo

uniform sampler2D albedo;

void main()
{
    buffer1 = vec4(pos, 1.);           // alpha = 1: this texel belongs to a real face
    buffer2 = normalize(norm);         // re-normalize after interpolation
    buffer3 = texture(albedo, uv).rgb;
}
```

You may run into trouble with faces whose area is smaller than one texel of your textures: the rasterizer only writes a texel when a face covers its center, so tiny faces can disappear entirely. This is a well-known problem; see the article on conservative rasterization in NVIDIA's GPU Gems 2. A simple geometry shader will help you fix it.
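The geometry-shader fix works in clip space on the GPU; the 2D Python sketch below only illustrates the underlying edge-offset idea, in texel units. Each edge of a counter-clockwise triangle is pushed outward far enough (half a texel, measured along the L1 norm of the edge normal) that any texel the triangle touches now has its center covered, and the new vertices are the intersections of consecutive offset edges. The function names are hypothetical. Sharp corners make the dilated triangle much larger than needed, so a real implementation also has to discard the extra texels (for example with a bounding-box test), and in a lightmap the dilation can spill into neighboring charts unless they are padded.

```python
def edge(p, q):
    """Edge function coefficients (a, b, c) such that a*x + b*y + c >= 0
    to the left of p -> q, i.e. inside a counter-clockwise triangle."""
    a = -(q[1] - p[1])
    b = q[0] - p[0]
    return a, b, -(a * p[0] + b * p[1])


def intersect(e1, e2):
    """Intersection point of the lines e1 = 0 and e2 = 0 (Cramer's rule)."""
    a1, b1, c1 = e1
    a2, b2, c2 = e2
    det = a1 * b2 - a2 * b1
    return ((b1 * c2 - b2 * c1) / det, (a2 * c1 - a1 * c2) / det)


def dilate_triangle(tri, half_texel):
    """Push every edge of a counter-clockwise 2D triangle outward so that the
    half-texel square around any point of the original triangle touches it."""
    edges = []
    for i in range(3):
        a, b, c = edge(tri[i], tri[(i + 1) % 3])
        edges.append((a, b, c + half_texel * (abs(a) + abs(b))))
    # new vertex i: where the edge ending at vertex i meets the edge starting at it
    return [intersect(edges[i - 1], edges[i]) for i in range(3)]
```

For a right triangle with unit legs and `half_texel = 0.5`, this gives the vertices (-0.5, -0.5), (2.5, -0.5) and (-0.5, 2.5).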

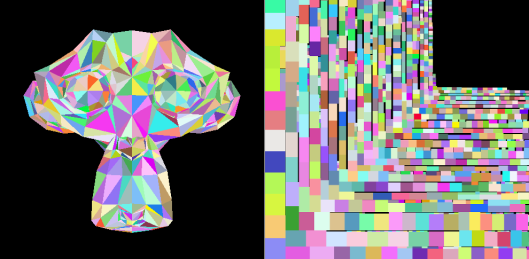

The output should be similar to this :

Note that if an object is dynamic, you only need to update the region of the 3 textures that this object occupies, which is really fast.
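One way to restrict the update, sketched in Python under the assumption that each object owns a fixed rectangle of the lightmap: compute the texel rectangle covered by the object's lightmap UVs and pass it to `glScissor` before clearing and redrawing only that object (`glClear` respects the scissor test). `lightmap_scissor` and its one-texel `padding` are hypothetical. Since an object's lightmap UVs don't change when it moves (only the positions and normals stored inside its rectangle do), the rectangle can be computed once.

```python
import math


def lightmap_scissor(uvs, size, padding=1):
    """Texel rectangle (x, y, width, height) covering all of an object's lightmap
    uvs, grown by `padding` texels and clamped to a size x size texture.
    The tuple follows the argument order of glScissor(x, y, width, height)."""
    min_u = min(u for u, _ in uvs)
    min_v = min(v for _, v in uvs)
    max_u = max(u for u, _ in uvs)
    max_v = max(v for _, v in uvs)
    x0 = max(0, math.floor(min_u * size) - padding)
    y0 = max(0, math.floor(min_v * size) - padding)
    x1 = min(size, math.ceil(max_u * size) + padding)
    y1 = min(size, math.ceil(max_v * size) + padding)
    return x0, y0, x1 - x0, y1 - y0
```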

Getting the data of your lights

We generate one more texture, again in texture space, containing the direct illumination (Phong + shadow mapping works well). You just need to draw a fullscreen quad and use the position and normal textures to compute the lighting of each texel. You must update the direct illumination each time the lights or objects change but, again, it's really cheap.
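A per-texel Python reference of this fullscreen pass, assuming a single point light, no distance attenuation, and only the diffuse (Lambert) part of Phong, since the lightmap is view-independent. `visibility` stands in for the shadow-map lookup (1 = lit, 0 = shadowed). Albedo is left out here because the compute shader multiplies the emitter albedo in itself (`em.alb * em.shad`). All names are hypothetical.

```python
import math


def direct_lighting(position, normal, light_pos, light_color, visibility=1.0):
    """Lambertian direct lighting of one texel from a point light."""
    to_light = [l - p for l, p in zip(light_pos, position)]
    dist = math.sqrt(sum(c * c for c in to_light))
    if dist == 0.0:
        return (0.0, 0.0, 0.0)
    n_dot_l = max(0.0, sum(n * c / dist for n, c in zip(normal, to_light)))
    return tuple(visibility * n_dot_l * c for c in light_color)


def direct_lighting_texture(positions, normals, light_pos, light_color):
    """Fullscreen-pass equivalent: one RGB value per texel, black where alpha == 0."""
    return [
        [direct_lighting(p[:3], n, light_pos, light_color) if p[3] != 0.0 else (0.0, 0.0, 0.0)
         for p, n in zip(prow, nrow)]
        for prow, nrow in zip(positions, normals)
    ]
```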

You will get something like this:

Computing the indirect lighting

You now have all the information you need to compute the radiance.

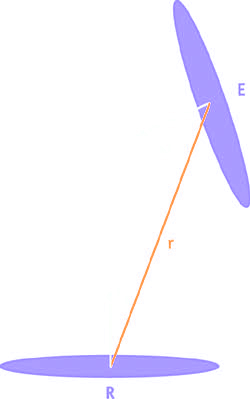

Following Michael Bunnell's chapter "Dynamic Ambient Occlusion and Indirect Lighting" in GPU Gems 2, I treat each texel of my buffers as a surfel (a small oriented disc with a position, a normal and an area).

We have this relation between receiver and emitter elements:

(image taken from GPU Gems 2 - Chapter 14)
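Written out, the transfer that the `radiance()` function of the compute shader evaluates is the following, for an emitter surfel $E$ of area $A_E$ and a receiver $R$ at distance $d$, with $\theta_E$ and $\theta_R$ the angles between each surfel's normal and the line joining the two:

$$
F_{E \to R} \approx \frac{A_E \,\max(\cos\theta_E, 0)\,\max(\cos\theta_R, 0)}{\pi d^2 + A_E},
\qquad
G_R = \sum_{E} F_{E \to R}\, \rho_E\, L_E
$$

Here $\rho_E$ is the emitter albedo and $L_E$ its lighting (the `albedo` and `lighting` textures). For two facing surfels ($\cos\theta_E = \cos\theta_R = 1$) it reduces to $A_E / (\pi d^2 + A_E)$, the form factor from a point to a parallel disc of area $A_E$ on its axis. Compared with the usual small-area approximation $A_E \cos\theta_E \cos\theta_R / (\pi d^2)$, the extra $A_E$ in the denominator keeps the term bounded as $d \to 0$.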

We can now derive a formula for the radiance transfer between two surfels and use a compute shader to generate our final indirect lighting texture:

```glsl
#version 430

uniform sampler2D position, direction, albedo, lighting;

layout (rgba32f) writeonly uniform image2D destTex;

const float PI = 3.14159265359;

layout (local_size_x = 16, local_size_y = 16) in;

struct Surfel
{
    vec4 pos;  // xyz = world position, w = 1 if the texel belongs to a real face
    vec3 dir;  // normal
    vec3 alb;  // albedo
    vec3 shad; // incoming lighting: direct lighting, or the previous bounce
};

Surfel getSurfel(ivec2 texel)
{
    // texelFetch reads exactly one texel: no filtering, no half-texel offset needed
    Surfel s;
    s.pos  = texelFetch(position,  texel, 0);
    s.dir  = texelFetch(direction, texel, 0).rgb;
    s.alb  = texelFetch(albedo,    texel, 0).rgb;
    s.shad = texelFetch(lighting,  texel, 0).rgb;
    return s;
}

float radiance(float cosE, float cosR, float a, float d) // Element to element radiance transfer
{
    return a * (max(cosE, 0.) * max(cosR, 0.)) / (PI * d * d + a);
}

void main()
{
    ivec2 texSize  = textureSize(position, 0);
    ivec2 storePos = ivec2(gl_GlobalInvocationID.xy);

    if (any(greaterThanEqual(storePos, texSize))) // invocation outside the texture
        return;

    Surfel rec = getSurfel(storePos);

    if (rec.pos.a == 0.) // Are we on a real face?
    {
        imageStore(destTex, storePos, vec4(0.));
        return;
    }

    vec3 gi = vec3(0.);

    for (int x = 0; x < texSize.x; x++)
    for (int y = 0; y < texSize.y; y++)
    {
        Surfel em = getSurfel(ivec2(x, y)); // Get emitter info

        if (em.pos.a == 0.) // Is it a real emitter?
            continue;

        vec3 v = em.pos.xyz - rec.pos.xyz; // vector from the receiver to the emitter
        float d = length(v) + 1e-16;      // avoid a division by zero when normalizing v
        v /= d;

        float cosE = dot(-v, em.dir);
        float cosR = dot( v, rec.dir);

        gi += radiance(cosE, cosR, 1./128., d) * em.alb * em.shad; // 1/128: hand-tuned surfel area
    }

    // Store the gathered light alone: multiplying by rec.shad would remove indirect
    // light exactly where there is no direct light. Multiply by the receiver albedo
    // when compositing, so this buffer can be fed back as `lighting` for the next bounce.
    imageStore(destTex, storePos, vec4(gi, 1.));
}
```

The result is the first bounce of indirect lighting. To get further bounces, repeat the process as many times as you want, binding your last GI buffer to the `lighting` sampler in place of the direct lighting.
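For checking the shader on the CPU, here is a small Python reference of the same gather, with hypothetical names. `transfer` is the shader's `radiance()`, `gather` is the double loop for one receiver (the shader's `1./128.` becomes the `area` argument), and `next_bounce` feeds one bounce back in as the lighting of the next, as described above. It is a brute-force O(n²) sketch, not an optimized implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class Surfel:
    pos: tuple     # world-space position
    normal: tuple  # unit normal
    albedo: tuple  # RGB
    light: tuple   # RGB lighting arriving at this surfel (direct, or previous bounce)


def transfer(cos_e, cos_r, area, d):
    """Element-to-element transfer, same formula as radiance() in the shader."""
    return area * max(cos_e, 0.0) * max(cos_r, 0.0) / (math.pi * d * d + area)


def gather(receiver, emitters, area):
    """One bounce for one receiver: light reflected towards it by every emitter."""
    gi = [0.0, 0.0, 0.0]
    for em in emitters:
        v = [e - r for e, r in zip(em.pos, receiver.pos)]  # receiver -> emitter
        d = math.sqrt(sum(c * c for c in v))
        if d == 0.0:
            continue  # the receiver itself: no self-transfer
        v = [c / d for c in v]
        cos_e = -sum(a * b for a, b in zip(v, em.normal))
        cos_r = sum(a * b for a, b in zip(v, receiver.normal))
        f = transfer(cos_e, cos_r, area, d)
        for i in range(3):
            gi[i] += f * em.albedo[i] * em.light[i]
    return tuple(gi)


def next_bounce(surfels, area):
    """Replace every surfel's lighting by what it gathered in this bounce."""
    return [Surfel(s.pos, s.normal, s.albedo, gather(s, surfels, area)) for s in surfels]
```

With two facing surfels one unit apart and `area = math.pi`, the transfer is π / (2π) = 0.5, so a white emitter lit with intensity 1 sends 0.5 to the receiver.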

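Of course, this pass is really slow: every receiver gathers from every emitter, so a 128² texture means 16384 × 16384 ≈ 2.7 × 10⁸ surfel pairs per bounce. You can spread the computation over several frames by sampling only a few emitters each time. I get two bounces in ~200 ms with a 128² texture on an NVIDIA GeForce GT 650M.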
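A minimal Python sketch of spreading the gather over several frames, assuming the emitters are split into interleaved slices (frame k handles every N-th emitter starting at k), so that each frame touches the whole scene sparsely. `emitter_slice`, `AmortizedSum` and the scalar `contribution(r, e)` callback are hypothetical. On the GPU this would correspond to giving the compute shader's emitter loop a start offset and a stride, and accumulating into the GI texture across dispatches.

```python
def emitter_slice(num_emitters, frame, frames_per_update):
    """Emitter indices handled on a given frame (interleaved slices)."""
    return range(frame % frames_per_update, num_emitters, frames_per_update)


class AmortizedSum:
    """Accumulates a per-receiver sum over frames_per_update frames and publishes
    it once every emitter slice has been added."""

    def __init__(self, num_receivers, frames_per_update):
        self.frames_per_update = frames_per_update
        self.partial = [0.0] * num_receivers
        self.frame = 0
        self.result = None  # last complete sum, None until the first one is ready

    def step(self, contribution, num_emitters):
        """contribution(r, e) -> float: transfer from emitter e to receiver r."""
        for e in emitter_slice(num_emitters, self.frame, self.frames_per_update):
            for r in range(len(self.partial)):
                self.partial[r] += contribution(r, e)
        self.frame += 1
        if self.frame % self.frames_per_update == 0:  # all slices done
            self.result = self.partial
            self.partial = [0.0] * len(self.partial)
        return self.result
```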

I interpolate the result over time to get a smooth transition between the new texture and the previous one.
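In GLSL this blend is just `mix(previousGI, currentGI, t)`. The Python sketch below shows one way to drive `t`, assuming a new GI texture becomes available every `update_period` seconds and a linear ramp is good enough; the names and the ramp are assumptions, not necessarily what the demo does.

```python
def blend_factor(time_since_update, update_period):
    """0 right after a new GI texture arrives, 1 once a full update period has passed."""
    return min(max(time_since_update / update_period, 0.0), 1.0)


def lerp_texel(previous, current, t):
    """Per-channel linear interpolation, like GLSL mix(previous, current, t)."""
    return tuple(p + (c - p) * t for p, c in zip(previous, current))
```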

Result and source code

Here are some of my results, and a comparison with and without global illumination:

The source code of the demo is available here.

Optimisation

To avoid the O(n²) complexity, you could perhaps build a quadtree of the geometry and sample only the surfels close to your receiver element. Of course, you would need to update this tree each time you move an object, and I don't know whether that's possible in real time. This seems to be Geomerics' approach: they precompute a structure that gives them the visibility of each element and its distance to the others, but all the geometry must be static.
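As a simpler stand-in for the quadtree, here is a Python sketch of a uniform 3D grid that returns only the surfels in the receiver's cell and its 26 neighbors. This is a swapped-in technique, not the author's method, and the names are hypothetical. Rebuilding it after an object moves is a single linear pass over the surfels, but everything outside the neighboring cells is simply ignored, so distant light is lost unless it is approximated some other way.

```python
import math
from collections import defaultdict


def build_grid(positions, cell_size):
    """Hash each surfel position into a uniform grid of cubic cells."""
    grid = defaultdict(list)
    for i, p in enumerate(positions):
        grid[tuple(math.floor(c / cell_size) for c in p)].append(i)
    return grid


def nearby_surfels(grid, point, cell_size):
    """Sorted indices of surfels in the cell containing `point` and its 26 neighbors."""
    cx, cy, cz = (math.floor(c / cell_size) for c in point)
    found = []
    for dx in (-1, 0, 1):
        for dy in (-1, 0, 1):
            for dz in (-1, 0, 1):
                found.extend(grid.get((cx + dx, cy + dy, cz + dz), []))
    return sorted(found)
```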

Greetz

The application was made with Muhammad Daoud for a school project at the University of Luminy.

The great model of the scene was made by Sylvain Bernard a.k.a. Mestaty. Thanks, mate (sorry I didn't use the final version...)!